FABLESS: The Transformation of the Semiconductor Industry

DANIEL NENNI

PAUL MCLELLAN

WITH FOREWORD BY CLIFF HOU

VP OF R&D, TSMC

A SEMIWIKI.COM PROJECT

Fabless: The Transformation of the Semiconductor Industry

Copyright 2019 by SemiWiki.com LLC. All rights reserved. Printed in the United States of America. Except as permitted under the United States Copyright Act of 1976, no part of this publication may be reproduced or distributed in any form or by any means or stored in a database or retrieval system without the prior written consent of the publisher.

Authors: Daniel Nenni and Paul McLellan

Editors: Beth Martin and Amanda Ketchum

ISBN-13: 978-1497525047

ISBN-10: 1497525047

BISAC: Business & Economics / General


Table of Contents

Introduction ...................................................................................................................... 7

Foreword............................................................................................................................ 9

Preface .............................................................................................................................. 11

Chapter 1: The Semiconductor Century ..................................................................... 15

Chapter 2: The ASIC Business ..................................................................................... 27

In Their Own Words: VLSI Technology ............................................................................ 35

In Their Own Words: eSilicon Corporation ....................................................................... 43

2019 Update: eSilicon Corporation ...................................................................................... 51

Chapter 3: The FPGA .................................................................................................... 57

Update 2019: FPGA Landscape ........................................................................................... 65

In Their Own Words: Xilinx ................................................................................................. 69

In Their Own Words: Achronix ........................................................................................... 83

Chapter 4: Moving to the Fabless Model .................................................................... 95

In Their Own Words: Chips and Technologies ............................................................... 101

Chapter 5: The Rise of the Foundry .......................................................................... 105

2019 Update: Foundries ....................................................................................................... 113

In Their Own Words: TSMC and Open Innovation Platform ..................................... 121

2019 Update: TSMC ............................................................................................................. 133

2019 Update: Dr. Morris Chang ......................................................................................... 147

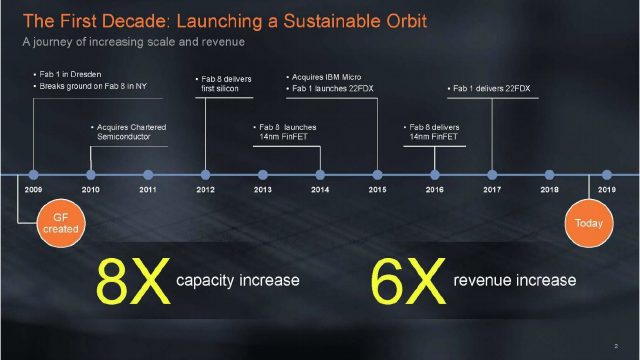

In Their Own Words: GLOBALFOUNDRIES ..................................................... 149

2019 Update: GLOBALFOUNDRIES ............................................................................. 159

Chapter 6: Electronic Design Automation ............................................................... 169

2019 Update: EDA ............................................................................................................... 181

In Their Own Words: Mentor Graphics ........................................................................... 185

2019 Update: Mentor Graphics .......................................................................................... 201

In Their Own Words: Cadence Design Systems .............................................................. 205

2019 Update: Cadence .......................................................................................................... 221

5

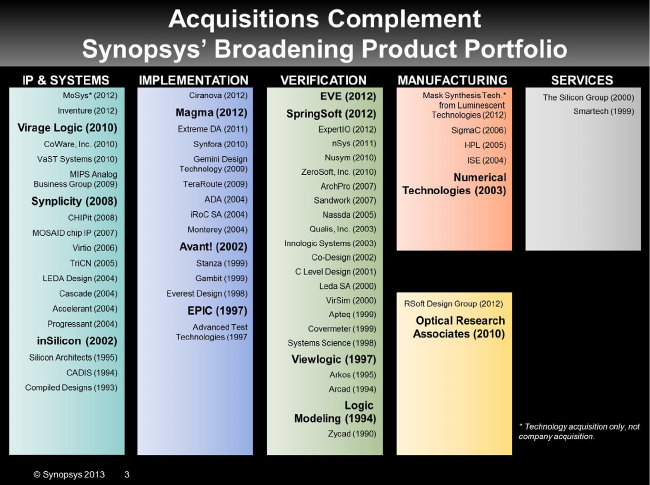

In Their Own Words: Synopsys ......................................................................................... 227

2019 Update: Synopsys ......................................................................................................... 243

Chapter 7: Intellectual Property ................................................................................. 249

2019 Update: Semiconductor IP ......................................................................................... 257

2019 Update: In Their Own Words: ARM ....................................................................... 261

In Their Own Words: Imagination Technologies............................................................ 271

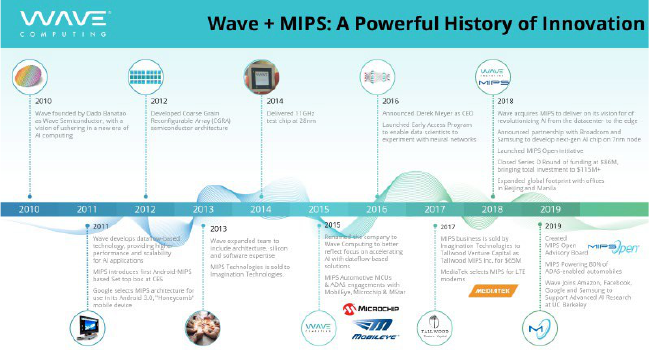

2019 Update: Wave Computing and MIPS Technologies .............................................. 285

2019 Update: IP Management ............................................................................................. 291

In Their Own Words: Methodics ....................................................................................... 295

Chapter 8: What’s Next for the Semiconductor Industry? ..................................... 299


Introduction

Paul McLellan, Daniel Payne, and I have more than 100 years of combined experience in the semiconductor industry. We started sharing our observations, opinions, and experiences when semiconductor blogging was just getting started. Many semiconductor bloggers followed, their numbers peaking at more than 200 in 2010. We literally brought blogging to the semiconductor industry, which was very disruptive at the time. Blogging is hard work, and without compensation for independent bloggers, only the company (employee) bloggers would survive. In 2011 Paul, Daniel, and I joined our blogs together to create a crowdsourcing platform (SemiWiki.com), not only to appeal to a wider audience but also to get compensated for our efforts.

At first we were chastised for pretending to be journalists; in fact, we were not allowed press passes or access to press rooms at conferences. The tide turned, of course, and now blogging is the media mainstay for all industries, including semiconductors. Don’t be fooled by fancy executive editor titles: the majority of the news today is written by people like us who share observations, experience, and opinions. The difference, of course, is that most mainstream semiconductor bloggers do not have deep semiconductor experience like the SemiWiki contributors.

Dozens of people have blogged on SemiWiki and more than three million people have visited. SemiWiki has published more than five thousand blogs since 2011, garnering more than thirty-three million views. The result is a trove of content and analytics on who reads what, when, where, how, and why. Several of the regular SemiWiki bloggers have moved on to bigger and better things, but most are still here and active in the industry that we all love.

On June 1, 2019, we launched SemiWiki 2.0, which includes an IP Enablement Portal. We will talk more about this in the Semiconductor IP chapter update, so keep reading. It will be another SemiWiki disruption, and we hope you will be part of it, absolutely.

SemiWiki has also published seven books, with more planned. This particular book started it all when Paul McLellan, Beth Martin, and I decided to document the history of the fabless semiconductor industry as published on SemiWiki.com. It was a labor of love: we posted a free PDF version and have given away thousands of print copies over the last six years.

A lot has happened in the semiconductor ecosystem since we first published in 2013, so we decided to do a revised edition. It has grown by more than 50 pages and includes updates from eSilicon, Synopsys, Mentor Siemens, Cadence, and ARM, plus new “In Their Own Words” entries from Achronix, Methodics, and Wave/MIPS. Also included are industry updates on FPGA, Foundry, EDA, IP, TSMC, and GLOBALFOUNDRIES, and a new subchapter on IP Management. Most importantly, there is a NEW Chapter 8, “What’s Next for the Semiconductor Industry,” written by EDA icon Dr. Walden Rhines. Thank you again for reading, and I hope to see you on www.SemiWiki.com.

Daniel Nenni

CEO and Founder, SemiWiki.com LLC

June 2019


Foreword

Dr. Cliff Hou, Vice President, Research and Development, TSMC

Semiconductor innovation has the power to change the world. Yet well over half a century ago, when semiconductors first came into being, few people really saw that promise. The innovative power of semiconductors has stretched well beyond their original applications, and it has also changed how semiconductors themselves are manufactured.

Over the first 30 years of its existence, the semiconductor industry followed the proven integrated manufacturing model of the time. Companies that owned the manufacturing assets researched, developed, made, and marketed their own products. But then the dynamics of innovation mingled with the laws of supply and demand, and a new concept, outsourcing, emerged and gave birth to what is known today as the dedicated foundry model. The world has never been the same.

Dr. Morris Chang is credited with identifying the innovation need and providing the resources to meet it. The need was for manufacturing resources 100 percent dedicated to emerging semiconductor companies that lacked the financial wherewithal to own expensive equipment. Like all great ideas, the premise was simple. What no one foresaw is that it would give rise to two, if not three, new industry segments, all of which contribute greatly to the innovative spirit of the industry today.

When Dr. Chang established the Taiwan Semiconductor Manufacturing Company (TSMC) in 1987, the foundry segment and the fabless semiconductor model were born. Today, fabless semiconductor companies (those that do not own manufacturing resources) are the fountainhead of innovation and the foundation of our electronic world. The foundry segment has allowed these companies to invest in design and innovation rather than in manufacturing. As a result, innovation and the world economy have raced forward at an unprecedented pace. This has given nearly every semiconductor company the flexibility to innovate widely and creatively, constantly expanding the universe of products we rely upon today.

Equally remarkable has been the rise of a powerful design ecosystem to complement the fabless industry. The ecosystem works in unison with designers and foundries to ensure that the IP, design tools, and services needed to get next-generation designs taped out and into production are proven and ready to help customers meet their time-to-market goals. Today, the fabless model, the dedicated foundry segment, and an independent design ecosystem are driving the mobile revolution and will be the foundation of the Internet of Things.

Even as this book was being written, the semiconductor industry continued to evolve. The drive to integrate the design and manufacturing links in the semiconductor value chain is now being extended upstream (to manufacturing equipment and materials suppliers) and downstream to major product companies. This harnesses the power of integration: virtual integration. Virtual integration is, by definition, the power of collaboration that sets the direction and vision for the next generation of innovation.

Innovation will always be the hallmark of the semiconductor industry, and it is the theme that runs through this book. I’m honored and humbled to be part of this exciting industry and equally honored and humbled to offer my comments as the introduction to this book.

Dr. Cliff Hou, January 2014


Preface

The purpose of this book is to illustrate the magnificence of the fabless semiconductor ecosystem, and to give credit where credit is due.

We trace the history of the semiconductor industry from both a technical and a business perspective. We argue that the development of the fabless business model was a key enabler of the growth in semiconductors since the mid-1980s. Because business models, as much as technology, are what keep us thrilled with new gadgets year after year, we focus on the evolution of the electronics business.

We also invited key players in the industry to contribute chapters. These “In Their Own Words” chapters allow the heavyweights of the industry to tell their corporate histories for themselves, focusing on the industry developments (in both technology and business models) that made them successful, and on how they in turn drive the further evolution of the semiconductor industry.

Before we dive in, let’s define some terms. Rather than electronics, which refers to whole devices like your cell phone or TV, we’ll use the terms chip, IC, ASIC, SoC, and FPGA throughout the book as we focus on the components that go into those devices. Chip or IC can refer broadly to the two main types of semiconductor devices we cover: ASICs and SoCs (systems-on-chip), and FPGAs (field-programmable gate arrays). We have chosen not to cover many other electronic components, including memory, flash, mixed-signal technology, and micro-electro-mechanical systems (MEMS).

We also talk about several phases of development in the semiconductor industry, and use the following terms to describe the companies and technologies that define a particular business model.

IC: An integrated circuit, also called a chip, is a set of electronic circuits, including transistors and other components, on a silicon substrate.

Systems company: A systems company makes end products from chips that other companies have designed. Examples include Cisco and Apple.

Semiconductor company: Also called integrated device manufacturers (IDMs), these companies, like Intel and Samsung, design and manufacture standard ICs that systems companies use in their products. Until the mid-1980s, all semiconductor companies were IDMs; that is, they controlled both the design and manufacture of their chips. This changed gradually, and now only a few remain, notably Intel and Samsung. All other chip makers outsource the manufacturing of their designs to a foundry.

ASIC: Application-specific integrated circuit refers to two things: a chip that is custom designed for a specific application rather than for general-purpose use, and the type of company that developed in the 1980s to perform the physical design and manufacturing of these application-specific ICs for other semiconductor or systems companies. “ASIC” is now commonly used interchangeably with “IC.”

SoC: A system-on-chip is an IC that integrates all components of a computer or other electronic system into a single chip. It may contain digital, analog, mixed-signal, and often radio-frequency functions, all on a single chip substrate.

Fabless company: A company that designs its own chips but outsources the manufacturing to a third party, either a pure-play foundry or an IDM that sells excess fab capacity. This is the prevailing business model today.

EDA: Electronic design automation companies make the software that is used to design all modern semiconductor devices. The three dominant EDA companies today are Synopsys, Cadence Design Systems, and Mentor Graphics (now part of Siemens).

IP: Semiconductor intellectual property companies sell chip designs that are implemented in their customers’ ASICs, SoCs, or other semiconductor devices. A useful metaphor is that rather than selling you a complete house, IP companies sell you the blueprint. The best-known IP company is ARM.

Foundry: A dedicated semiconductor fabrication business that does not design its own ICs. The term “fab” refers to any semiconductor fabrication plant, whether run as part of an IDM (like Intel) or as a foundry (like TSMC).

The economics of designing a chip and getting it manufactured are similar to how the pharmaceutical industry gets a new drug to market. Getting to the stage at which a drug can be shipped to your local pharmacy is enormously expensive. But once it’s done, you have something that can be manufactured for a few cents and sold for, perhaps, ten dollars. ICs are like that, although for different reasons. Getting an IC designed and manufactured is incredibly expensive, but then you have something that can be manufactured for a few dollars and put into products that can be sold for hundreds of dollars. One way to look at it is that the first IC costs many millions of dollars, so you only make a lot of money if you sell a lot of them.
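The point that you only make money by selling a lot of chips is a break-even argument, and a few lines of arithmetic make it concrete. The sketch below is a minimal Python illustration; the function names and every dollar figure in it are hypothetical, chosen for the example rather than taken from the book.

```python
import math


def break_even_units(nre: float, unit_cost: float, unit_price: float) -> int:
    """Smallest number of units whose total margin covers the up-front (NRE) cost."""
    margin = unit_price - unit_cost
    if margin <= 0:
        raise ValueError("unit_price must exceed unit_cost")
    return math.ceil(nre / margin)


def cost_per_unit(nre: float, unit_cost: float, volume: int) -> float:
    """Fully loaded cost per unit: manufacturing cost plus the amortized NRE."""
    return unit_cost + nre / volume


# Hypothetical figures, for illustration only.
NRE = 20_000_000   # $20M to design and tape out the first chip
UNIT_COST = 3.0    # $3 to manufacture each additional chip
UNIT_PRICE = 10.0  # $10 selling price per chip

print(break_even_units(NRE, UNIT_COST, UNIT_PRICE))  # 2857143
for volume in (100_000, 1_000_000, 10_000_000):
    print(volume, round(cost_per_unit(NRE, UNIT_COST, volume), 2))
```

With these assumed numbers, nearly 2.9 million chips must be sold just to recover the design cost, and the fully loaded cost per chip only approaches the manufacturing cost once volumes reach the millions.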

What we hope you learn from this book is that even though IC-based electronics are cheap and pervasive, they are neither cheap nor easy to make. It takes teams of hundreds of design engineers to design an IC, and a complex ecosystem of software, components, and services to make it happen. The fabs that physically manufacture the ICs cost more to build than a nuclear power plant. Yet year after year, for 40 years, the cost per transistor has decreased along a steady and predictable curve. There are many reasons for this cost reduction, and we argue that the fabless semiconductor business model has been among the most important of them over the past three decades.

The next chapter is an introduction to the history of the semiconductor industry, including the invention of the transistor (the basic building block of all modern digital devices), the invention of the integrated circuit, and the businesses that developed around them.

Chapter 1: The Semiconductor Century

Although the technology behind our electronic devices is largely hidden from sight, its influence on our daily lives, our health, our economy, and our entertainment is undeniable. Today, digital electronics are ubiquitous and indispensable to modern life. But it wasn’t always so. Two big things happened to bring consumer electronics into every household: the invention of the transistor in 1947, and the invention of the integrated circuit (IC) in 1959. Then, lots of little things happened to make ICs small and cheap enough to occupy nearly every aspect of our lives.

For the average Western child in the 1950s and 1960s, the only electronics in the household were the radio and the television, both of which contained vacuum tubes (valves in some countries), not digital semiconductor technology. The only widespread semiconductor-based product was the transistor radio, which you could buy for roughly $20 ($150 in 2013 dollars).

In the 1970s, kids still watched analog TVs, but all radios were transistor-based, and you could buy a pocket calculator (for about $160 in 2013 dollars), an early PC, a digital watch, and an Atari game console. A kid in the 1980s would also have a Walkman, a CD player, a VCR, a video camera, a boom box, an electric typewriter, and maybe an actual IBM PC. Anyone born after 1990 will probably not remember a time without cell phones, flat-panel TVs, Game Boys, laptops, and tablets. Electronics are now incorporated into nearly everything, from home thermostats and toothbrushes to cars and medical devices.

Today, an iPad has more processing power than a refrigerator-sized Cray supercomputer of 1990. Our cars contain dozens of microprocessors. We shop online. We read books on tablets. We play video games on consoles that are more powerful than the flight simulators of twenty years ago. We’ll let futurists predict what electronics a child born in 2013 might never live without. The number and types of electronic devices we encounter daily have followed a steep curve up and to the right.

The Invention of the Transistor and the Integrated Circuit

The transistor, which is just a switch that controls the flow of electrical current in a computer chip, is at the heart of almost all electronics, making it among the most important inventions of the 20th century. It was invented at Bell Labs in New Jersey in 1947 by John Bardeen, Walter Brattain, and William Shockley. Shockley then left Bell Labs and returned to Palo Alto, CA, where he had grown up. He opened Shockley Semiconductor Laboratory as a division of Beckman Instruments, and tried to lure ex-colleagues from Bell Labs to join him. When he was unsuccessful, he searched universities for the brightest young graduates to build the new company. This was truly the genesis of Silicon Valley, and of some of its culture that still exists today. Shockley is credited with bringing the silicon to Silicon Valley.

“What we didn’t realize then was that the integrated circuit would reduce the cost of electronic functions by a factor of a million to one, nothing had ever done that for anything before” -Jack Kilby

Shockley’s management style was abrasive, and he alienated many who worked for him. The final straw came when Shockley decided to discontinue research into silicon-based transistors. Eight people, known as the “traitorous eight,” resigned and, with seed money from Fairchild Camera and Instrument, created Fairchild Semiconductor. Almost all semiconductor companies, notably Intel, AMD, and National Semiconductor (now part of Texas Instruments), have their roots in Fairchild in one way or another. For this reason, they are often referred to as “Fairchildren.” These companies drove the development of silicon-based integrated circuits. Silicon wasn’t the only material in play for making transistors, but it turned out to be the winning technology.

The next key invention came in 1959 from Jean Hoerni at Fairchild, who created the “planar” manufacturing process, which flattened the transistor and allowed it to be mass-produced. Around the same time, Jack Kilby at Texas Instruments and Robert Noyce at Fairchild independently developed the integrated circuit. The IC connected diodes, transistors, resistors, and capacitors on a single chip. Kilby and Noyce both received the National Medal of Science, and Kilby received the Nobel Prize in Physics for the work in 2000 (Noyce had died in 1990, and the Nobel Prize is not awarded posthumously).

The integrated circuit turned out to be the big breakthrough. Until then, transistors were built one at a time and wired together manually using “flying-wire” connections. The planar manufacturing process allowed multiple transistors to be created and connected together simultaneously. By 1962, Fairchild was producing integrated circuits with about a dozen transistors. Much has changed in the intervening years, but we use the same basic principle to build modern billion-transistor chips.

Those two inventions, the transistor and then the integrated circuit, are the key to electronics today and to all the ways in which electronics affect our lives.

Moore’s Law

“The whole point of integrated circuits is to absorb the functions of what previously were discrete electronic components, to incorporate them in a single new chip, and then to give them back for free, or at least for a lot less money than what they cost as individual parts. Thus, semiconductor technology eats everything, and people who oppose it get trampled.” -Gordon Moore

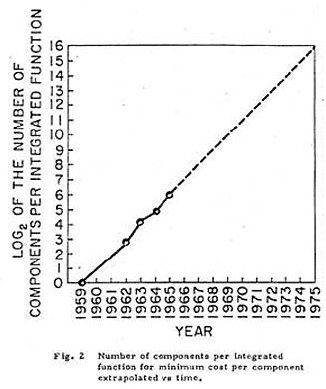

In 1965, Gordon Moore was the head of research and development at Fairchild. Moore noticed that the number of transistors on the integrated circuits that Fairchild was building seemed to double every year, as shown in the graph from his original 1965 article in Electronics (vol. 38, no. 8), titled “Cramming More Components onto Integrated Circuits.” In 1975 he revised the rate to a doubling every two years.

Moore’s original graph. It predicts a steady rise in the number of transistors on a chip.

As he pointed out in that article, “Integrated circuits will lead to such wonders as home computers, automatic controls for automobiles, and personal portable communications equipment.”

Remember that this was 1965, when the most complex integrated circuits contained about 64 transistors. This was an extraordinary prediction, and he was right: we do have home computers, automatic controls for automobiles (not quite fully automatic yet), and personal portable communications equipment, also known as cellphones. His prediction differed from popular science fiction assumptions about future technology because it was based on observed facts about the trajectory of computational capacity. Notice that he did not predict flying cars or unlimited power sources, two technologies that mid-century futurists assumed were inevitable.

Surprisingly, nearly 50 years after Moore made his observation, semiconductors still seem to be increasing in complexity at roughly this rate. Gordon Moore’s original prediction is now known as “Moore’s Law.”
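Steady doubling compounds quickly. This minimal Python sketch assumes a starting point of about 64 transistors in 1965 and a fixed two-year doubling period; real chips never tracked a single clean curve, so treat the output as an order-of-magnitude illustration. The function name `projected_count` is hypothetical.

```python
def projected_count(n0: float, year0: int, year: int, doubling_years: float = 2.0) -> float:
    """Project a count that doubles every `doubling_years` years, starting from n0 in year0."""
    return n0 * 2 ** ((year - year0) / doubling_years)


for year in (1965, 1985, 2013):
    # Thousands separators, no decimal places.
    print(year, f"{projected_count(64, 1965, year):,.0f}")
```

Twenty-four doublings between 1965 and 2013 take 64 transistors to about 1.07 billion, the same order of magnitude as the billion-transistor chips mentioned earlier.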

However, it is possible to look at Moore’s Law another way: the cost of any given functionality implemented in electronics halves every two years or so. Over twenty years, that is ten halvings, or roughly a thousand-fold reduction (2^10 = 1,024). A modern video-game console has far more computing power and much better graphics than the highest-end flight simulators of the 1970s. Every ink-jet printer has far more computing power than NASA had at its disposal for getting to the moon.
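Read as a cost curve, the same doubling becomes repeated halving. A minimal sketch, assuming an exact two-year halving period (the text says “every two years or so”); `cost_fraction` is a hypothetical helper name:

```python
def cost_fraction(years: float, halving_years: float = 2.0) -> float:
    """Fraction of the original cost left after `years` of steady halving."""
    return 0.5 ** (years / halving_years)


for years in (10, 20, 30):
    # 1 / fraction is the overall cost-reduction factor.
    print(years, round(1 / cost_fraction(years)))
```

Twenty years gives a factor of 1,024, which is where the “thousand-fold” figure comes from; thirty years already exceeds 30,000.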

It is this exponential reduction in electronic costs that has transformed so many aspects of our lives in the twenty years or so since integrated circuits became cheap enough to go into consumer electronic products. Because of this fast growth in semiconductor technology, we have expectations about electronics that we don’t have for anything else. We don’t expect our cars to cost half as much or get double the gas mileage every few years. Intel made another comparison: if the airline industry obeyed Moore’s Law, a flight from New York to Paris that took seven hours and cost $900 in 1978 would have taken about a second and cost about a penny in 2005.

How ICs are Made

The process of designing and manufacturing an IC can seem abstract. In fact, it is complex but not unfathomable. The design of ICs used to be a manual task, but is now accomplished with the help of specialized software; that aspect will be covered later. The basic manufacturing technique has evolved from the original planar process, in which ICs are built up in layers on top of a disc of silicon called a wafer. A modern wafer is 300 mm (roughly 12”) in diameter with an area of roughly 70,000 sq. mm, about the size of a dinner plate. If the ICs are small, say 1 mm on each side, the wafer will hold about 70,000 of them. If you’re making giant ICs, say 20x20 mm, you can fit only 148 on a wafer, fewer than the roughly 175 that dividing the areas would suggest, because only complete ICs are usable and the partial ones around the wafer’s curved edge are wasted. The ICs on a wafer are called die. Die is used as both singular and plural in the semiconductor industry.
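The edge effect can be estimated with a widely used textbook approximation for gross die per wafer: wafer area divided by die area, minus a term for the partial die lost around the circumference. This is a minimal sketch under that assumption; it ignores scribe lines and edge-exclusion zones, and the function name is hypothetical.

```python
import math


def gross_dies_per_wafer(wafer_diameter_mm: float, die_width_mm: float, die_height_mm: float) -> int:
    """First-order estimate of whole die on a round wafer.

    First term: wafer area / die area.
    Second term: approximate number of partial die lost around the edge.
    Scribe lines and edge exclusion are ignored.
    """
    die_area = die_width_mm * die_height_mm
    radius = wafer_diameter_mm / 2
    by_area = math.pi * radius ** 2 / die_area
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area)
    return int(by_area - edge_loss)


print(gross_dies_per_wafer(300, 1, 1))    # 70019
print(gross_dies_per_wafer(300, 20, 20))  # 143
```

For a 300 mm wafer this gives about 70,000 die of 1x1 mm and about 143 die of 20x20 mm, in the same ballpark as the figures above; different estimators model edge losses and scribe lines differently, so exact counts vary.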

Starting with the bare silicon wafer, layers of different materials (semiconductor, metal, and dielectrics) are deposited one at a time. The layers that define the transistors are created first, building all the transistors at once. Next, layers of metal are deposited and then etched with chemicals to pattern them into the wires that connect all the transistors together and that supply power from outside the chip (from the battery in your cellphone, for example).

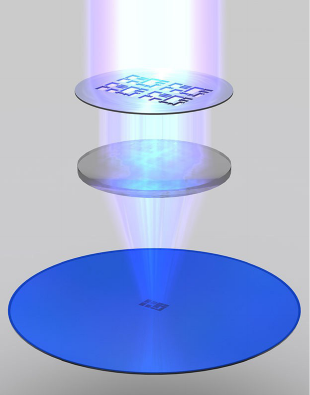

Light shines through a reticle, which acts as a stencil to create patterns on the wafer. Illustration courtesy of Intel.

The key feature of the production process is that all the transistors on all the die on the wafer are created simultaneously, and each layer of metal is created simultaneously across the whole wafer. It is this incredible level of efficiency, making trillions of transistors at once, that has allowed the price of electronic products to fall by around 5% per month, year after year.

The manufacturing process is based on a photographic process known as photolithography, in which each die is exposed to light through a mask (more correctly called a reticle). The reticles are usually the negative image of all the components of the integrated circuit. A machine called a stepper exposes each die, one at a time, to a flash of laser light through the reticle, and then steps over to the next die until the whole wafer has been exposed. The process captures the reticle pattern in photoresist, a wafer coating whose chemical properties are modified by exposure to the light. The wafer is then developed, leaving the corresponding reticle pattern in photoresist at each die location.

The huge gain in efficiency comes after the stepper is done stamping the pattern onto each die. That’s when the entire wafer is processed (etched, doped, heated, etc.) to transform the patterns into the real transistors, wires, and vias (the connections between metal layers) that make up the final integrated circuit.

It is worth emphasizing that the manufacturing process doesn’t depend on what is being manufactured. A computer printer doesn’t need to be reconfigured depending on what you want to print; you just send it different data. In the same way, a semiconductor manufacturing process doesn’t depend on what the circuit is going to do.

The full details of the manufacturing process are obviously too complex to go into here. The important thing to remember is that it doesn’t matter how many transistors are on the die, or what the final product will be: all transistors on a die are created at once, and all die on the wafer are processed very efficiently at the same time.

Where ICs are Made

The factories that make ICs are called fabs. The inside of a fab is kept extremely clean: a hospital operating theater is filthy by the standards of the “clean rooms” in a fab. The air in the fab may be completely changed every few seconds, as high-efficiency particulate air (HEPA) filters in the ceiling blow air down and out through perforations in the floor before it is filtered and recirculated. Recently, fabs have found that even that air is not clean enough, since just a few random particles landing on a die can ruin it. These days, the wafers being processed are contained in even cleaner boxes that attach to each piece of manufacturing equipment in turn. A large part of the cost of a fab is not the manufacturing equipment, expensive though it is, but the equipment for keeping everything inside the fab clean.

Inside an Intel fab, a technician works in a clean suit, or “bunny suit.” Image courtesy of Intel.

Why is cleanliness so important? The transistors on a modern integrated circuit are 20 nanometers (nm) across. There are 1 million nanometers in a millimeter. By contrast, a human hair is around 100,000 nm (0.1 mm) thick. Obviously, a hair ending up on a wafer would be a complete disaster, blocking thousands of transistors from being manufactured correctly and causing that die to fail. But it only takes a particle around 10 nm across falling on the wafer to cause a die to (probably) fail. If a die is not manufactured correctly, it is simply thrown away; there is typically no process to repair it after it’s made.

A modern fab is wildly expensive. One major company estimated a cost of $10 billion for a fab due to start construction in 2014. With a lifetime of perhaps 5 years, owning that fab costs more than $60 per second, and that’s before you buy any silicon or chemicals or design any chips. Obviously, anyone owning a fab had better plan on making and selling a lot of chips if they are going to make any money. That’s exactly what they do: a modern fab manufactures over 50,000 dinner-plate-sized wafers every month.
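A back-of-the-envelope check using the figures in the text ($10 billion, a 5-year life, 50,000 wafers a month) and simple straight-line depreciation. This is a minimal sketch: the function names are hypothetical, and real fab accounting (depreciation schedules, utilization, equipment reuse across nodes) is more complicated.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 (leap years ignored)


def cost_per_second(fab_cost: float, lifetime_years: float) -> float:
    """Straight-line depreciation spread evenly over every second of the fab's life."""
    return fab_cost / (lifetime_years * SECONDS_PER_YEAR)


def cost_per_wafer(fab_cost: float, lifetime_years: float, wafers_per_month: int) -> float:
    """Fab depreciation allocated to each wafer, before materials, labor, or design."""
    return fab_cost / (lifetime_years * 12 * wafers_per_month)


print(round(cost_per_second(10e9, 5), 2))         # 63.42
print(round(cost_per_wafer(10e9, 5, 50_000), 2))  # 3333.33
```

Dividing the fab cost alone across its output adds over $3,000 to every wafer before materials, labor, or design costs are counted, which is why a fab has to run at high volume to pay for itself.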

Fabs were not always so expensive, and until relatively recently most semiconductor companies owned their own fabs. In 1980, there were no semiconductor companies that didn’t own fabs to manufacture their own designs. However, the economics of fabs have completely changed the semiconductor ecosystem over the last twenty years or so.

The model for semiconductor companies now is to outsource

manufacturing. Companies that do this are called “fabless,” and the

companies that manufacture their ICs are called foundries. This change in

the semiconductor ecosystem is a recurring theme of this book and has

been essential to the success of the semiconductor industry.

Business Models from Fab to Fabless

The first step that led to the outsourcing of manufacturing was when

companies began sharing their in-house fabs with other companies. A

company with a large fab would have excess capacity at times. To keep the

lines busy, they sold that capacity to other companies who needed more.

Then, in the early 1980s, a new type of semiconductor company formed

that specialized in helping systems companies design just the right chip for

their application, as opposed to buying standard ICs off the shelf. These

new companies would supply the knowledge of physical chip design and

also manufacture the chips (or have them manufactured) and ship them

back to the systems companies. These chips were known as application-specific

integrated circuits, or ASICs (although the less catchy term

“customer-specific integrated circuits” would have been more accurate).

The ASIC model allowed companies to design custom integrated circuits

without having to maintain the infrastructure of a fab.

By the mid-1980s, more companies started making specialized ICs, but

without investing in their own fabs. Instead, these companies would

purchase excess capacity at other companies’ fabs. These companies came

to be called, for obvious reasons, fabless semiconductor companies. This

was also when semiconductor companies with fabs became known as IDMs

(integrated device manufacturers), to distinguish them from the fabless

companies.

In 1987, another new breed of semiconductor company was created: the

pure-play foundry. A pure-play foundry only manufactures ICs for other

companies that are either fabless or have limited capacity in their own fabs.

It does not design semiconductor products itself. Before the foundry

business came along, getting a semiconductor company off the ground

was difficult and expensive. Building a fab required enormous capital, and starting a

fabless semiconductor company required a complicated negotiation for

excess capacity at a friendly IDM. Once foundries arrived, the cost

and the risk of entering the semiconductor market dropped drastically. The

result? A surge of new fabless semiconductor companies in the 1990s, many

funded by Silicon Valley venture capitalists to address the growing markets

for computer graphics, networking chips, and wireless phone chips.

The move to a fabless model wasn’t universally hailed as a good idea. Jerry

Sanders, the co-founder and long-time CEO of Advanced Micro Devices

(AMD), famously remarked in the late 1980s, as the fabless revolution was getting

underway, that “Real men have fabs.” What he meant was that design and

process needed to be tightly coupled. Because AMD was competing with

Intel in the microprocessor business, this statement was possibly true for

his business. It turned out not to be true for many businesses.

Over time, another change happened. As the specialized knowledge

about how to design integrated circuits gradually spread, many systems

companies stopped using the ASIC companies in favor of doing their

designs in-house. By the 1990s, many systems companies had very large

integrated circuit design teams and the ASIC companies gradually started

selling more and more of their own products until they became, in effect,

IDMs.

As fabs got more expensive, more IDMs (like Texas Instruments and

AMD) also chose the fabless model. Some switched to being completely

fabless; others kept their own older fabs and used third-party foundries

for their most advanced ICs, an approach known as fab-lite.

This is the landscape today. There are a few IDMs, such as Intel, that

design almost all of their own chips and build them in their own fabs. There

are foundries that design no chips; they only manufacture them for other

companies. Then there are fabless semiconductor companies such as Xilinx

and Qualcomm along with their fab-lite brethren such as Texas

Instruments, who design their own chips, sell their own products, but use

foundries for all or part of their manufacturing.

Along the way, other players have helped bring

semiconductor technology and business to their current state. One is the

electronic design automation (EDA) industry, which supplies the specialized software

needed to design ICs. This software was once developed in house by each

semiconductor company, but was later outsourced. The same is true for the

components that go onto many ICs, or systems-on-chips (SoCs).

Semiconductor companies once had to make all the components that went

on their chips themselves, or have them custom made by another company.

Now there is a robust market for licensing a wide variety of off-the-shelf

functions to put on chips. These include things like A/D converters,

memory, and processors, and are collectively known as silicon intellectual

property, or IP.

From the IC to the iPad

With this basic history of the transistor, we can look at the changes in the

semiconductor business and technology through the years and see how

we’ve arrived at the current state of the industry. The chapters of this book

cover the main story arc of the semiconductor industry:

• Genesis: the invention of the transistor and the integrated circuit

• The first major transition from off-the-shelf components to

ASICs

• The second major transition from owning fabs to the fabless model

• The growth of EDA: selling the software that makes it all work

• The role of IP: selling the building blocks for chips

• The future: industry luminaries look to what comes next

Each main topic is presented in a chapter that explores the history and

key technologies. Each chapter is punctuated by sections that were

contributed by the leading companies in the fabless semiconductor

landscape today. They explain in their own words their history and role in

the larger ecosystem. The last chapter of the book presents passages from industry

luminaries who share their vision of what will take the semiconductor

industry to the next level of innovation and financial success. Our hope is

that this combination of objective and subjective histories is both

informative and entertaining.

Chapter 2: The ASIC Business

Before the 1980s, ICs contained a limited number of transistors and were

designed and created by the traditional semiconductor companies like

Fairchild and Texas Instruments. The chips were generic: basic building

blocks that everyone bought and made into products. However, by the

early 1980s, as semiconductor technology reached a point where much

more functionality could fit onto a single chip, the people who made

electronics products began to search for new ways to stand out from the

competition. They wanted differentiated ICs, tuned to work specifically

in their own products.

This drove the development of a new type of chip, the application-specific

integrated circuit or ASIC, and a new business model that drastically

changed the layout of the semiconductor industry.

Traditional Semiconductor Business Stalls

The business model of semiconductor companies from the beginning of

the IC until the 1980s was to imagine what the market needed, create it,

manufacture it and then sell it on the open market to multiple customers.

As electronic products became more sophisticated, their customers wanted

chips that more specifically met their needs, rather than the generic chips that

were available to everyone. This was something the traditional

semiconductor companies were not equipped to provide, for both business

and technical reasons. On the technical side, semiconductor companies

knew a lot about semiconductors, but they lacked system knowledge, and

so were unable to design specific ICs for every market segment. On the

business side, providing more versions of their products would increase

design overhead costs and reduce the advantages of manufacturing huge

volumes of a limited number of products. The systems companies, on the

other hand, knew exactly what they wanted to build but didn’t have enough

semiconductor knowledge to create their own chips and didn’t have the

means to manufacture those chips even if they could design them. The

systems companies needed a new way of doing chip design.

The ASIC Business Blooms

There was clearly a new niche forming for a business that could figure

out how to create custom ICs for systems companies. Two companies in

particular, VLSI Technology and LSI Logic, pioneered this new ASIC

business. They both applied deep knowledge of semiconductor design and

manufacturing to a business model that consisted largely of building other

people’s chips. What emerged was a model in which the systems companies

did the early part of the design (called front-end) that specifies the exact

functionality they want, then handed the physical design (called back-end)

and manufacturing responsibilities to the ASIC company.

While it was initially thought of as a terrible business to be in—high

engineering costs and few customers—the advantages to this new model

became evident and the new ASIC companies did very well. LSI Logic, for

example, reported revenues of $2.75 billion by 2000.

The new ASIC model set the stage for a cascade of changes to the

semiconductor industry. For example, the budding electronic design

automation companies took note of this new market. They realized that

their design automation systems used for printed circuit boards could also

be used for the front-end steps of ASIC design.

How the ASIC Design Model Works

ASIC design typically worked like this. A systems company, often one

building an add-in board for the PC market, the big driver of

electronics in that era, would come up with some idea for a new chip. They

would negotiate with several ASIC companies and choose one to work with

even though they only had a vague idea of the size of the design at that

point. The chosen ASIC company would supply them with a library of basic

building blocks called standard cells.

The systems company would use a software tool called a schematic editor

to create the design, picking the cells they wanted from the library and

deciding how those cells should be connected. The output from this

process is called a netlist, essentially a list of cells and connections.
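To make “a list of cells and connections” concrete, here is a minimal Python sketch of how a netlist might be held in memory. The cell names (NAND2, INV), instance names (U1, U2) and net names are hypothetical, and real netlists were exchanged in tool-specific file formats rather than as Python objects.

```python
from dataclasses import dataclass, field


@dataclass
class CellInstance:
    """One use of a library cell (all names here are hypothetical)."""
    name: str                                            # instance name, e.g. "U1"
    cell_type: str                                       # library cell, e.g. "NAND2"
    pins: dict[str, str] = field(default_factory=dict)  # pin name -> net name


@dataclass
class Netlist:
    cells: list[CellInstance] = field(default_factory=list)

    def add(self, name: str, cell_type: str, **pins: str) -> None:
        self.cells.append(CellInstance(name, cell_type, dict(pins)))

    def nets(self) -> dict[str, list[tuple[str, str]]]:
        """Group every (instance, pin) pair by the net it is wired to."""
        connections: dict[str, list[tuple[str, str]]] = {}
        for cell in self.cells:
            for pin, net in cell.pins.items():
                connections.setdefault(net, []).append((cell.name, pin))
        return connections


def build_example() -> Netlist:
    """y = a AND b, built from a NAND2 cell feeding an inverter."""
    netlist = Netlist()
    netlist.add("U1", "NAND2", A="a", B="b", Y="n1")
    netlist.add("U2", "INV", A="n1", Y="y")
    return netlist


if __name__ == "__main__":
    for net, pins in build_example().nets().items():
        print(net, pins)
```

The internal net `n1` is the “connection” the schematic editor records: it ties U1's output pin to U2's input pin.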

Just like writing software or writing a book, the first draft of the design

would be full of errors. But with semiconductor technology, it isn’t practical

to build the part just to see what the errors are. Even back then,

manufacturing the first chip, known as the prototype, could cost tens of

thousands of dollars and take a couple of months. Also unlike book writing,

it’s not possible to simply proofread or inspect the schematic; too many

errors would still slip through.

Instead, designers simulated the function of the design with software. A

flight simulator tells a pilot what would happen if he or she moves the

controls a certain way, and there is no cost to crashing in the simulation. In

the same way, a simulation of the design checked how it behaved given

certain inputs without requiring the expense of building the chip. Errors

detected through simulation could be fixed and the simulation could be run

again until no more errors remained.
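A toy version of this loop can be written as a Python sketch: a zero-delay logic simulator that evaluates a netlist for every input combination and reports where it disagrees with the intended behavior. The cell set, the tuple-based netlist format and the deliberately buggy “first draft” are all made up for illustration; real simulators of the era applied test vectors and modeled timing, and exhaustive checking only works here because the circuit has just two inputs.

```python
from itertools import product

# Boolean behaviour of each cell in a hypothetical library.
CELL_FUNCTIONS = {
    "INV": lambda a: not a,
    "NAND2": lambda a, b: not (a and b),
    "NOR2": lambda a, b: not (a or b),
}


def simulate(netlist, inputs):
    """Zero-delay evaluation of a netlist.

    netlist: (cell_type, input_nets, output_net) tuples, ordered so that every
    cell appears after the cells that drive its inputs.
    inputs: primary input net name -> bool.
    """
    values = dict(inputs)
    for cell_type, in_nets, out_net in netlist:
        values[out_net] = CELL_FUNCTIONS[cell_type](*(values[n] for n in in_nets))
    return values


def find_mismatches(netlist, spec, input_names, output_name):
    """Try every input combination; list (inputs, got, expected) where they differ."""
    mismatches = []
    for combo in product([False, True], repeat=len(input_names)):
        inputs = dict(zip(input_names, combo))
        got = simulate(netlist, inputs)[output_name]
        expected = spec(**inputs)
        if got != expected:
            mismatches.append((inputs, got, expected))
    return mismatches


def and_spec(a, b):
    """What the designer intended: y = a AND b."""
    return a and b


# Intended design: a NAND2 followed by an inverter.
GOOD = [("NAND2", ("a", "b"), "n1"), ("INV", ("n1",), "y")]
# "First draft" with a mistake: NOR2 picked instead of NAND2.
BUGGY = [("NOR2", ("a", "b"), "n1"), ("INV", ("n1",), "y")]

if __name__ == "__main__":
    print("good design mismatches:", find_mismatches(GOOD, and_spec, ["a", "b"], "y"))
    print("buggy design mismatches:", find_mismatches(BUGGY, and_spec, ["a", "b"], "y"))
```

The buggy draft behaves as an OR gate, so it fails exactly when one input is high; swapping in the right cell and rerunning until the mismatch list is empty mirrors the fix-and-rerun cycle described above.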

When the design was finally determined to be functionally correct, the

netlist was sent from the systems company to the ASIC company for the

next step. Using a software program that placed the standard cells and

wired them together (known as place & route), the netlist would be

converted to a physical layout. The netlist is a list of cells and connections,

something like an architectural spec that says which room connects to

which and by how many doors; the output of place & route adds the

physical locations and specific wire routing, analogous to a completed

house blueprint.
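Place & route tools need some way to score a candidate placement before the detailed wiring is done. A common textbook estimate is half-perimeter wirelength (HPWL): for each net, the half-perimeter of the smallest rectangle enclosing all the cells it connects. The Python sketch below computes it; the cell names, grid coordinates and the choice of HPWL as the metric are illustrative assumptions, not a description of any particular vendor's tool.

```python
def half_perimeter_wirelength(nets, placement):
    """Estimate total wire length for a placement.

    nets: net name -> list of cell instance names on that net.
    placement: cell instance name -> (x, y) position on a grid.
    """
    total = 0
    for cells in nets.values():
        xs = [placement[cell][0] for cell in cells]
        ys = [placement[cell][1] for cell in cells]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


# Hypothetical three-cell design and two candidate placements.
NETS = {"n1": ["U1", "U2"], "n2": ["U2", "U3"], "n3": ["U1", "U3"]}
COMPACT = {"U1": (0, 0), "U2": (1, 0), "U3": (2, 0)}
SCATTERED = {"U1": (0, 0), "U2": (5, 5), "U3": (1, 0)}

if __name__ == "__main__":
    print("compact:", half_perimeter_wirelength(NETS, COMPACT))
    print("scattered:", half_perimeter_wirelength(NETS, SCATTERED))
```

The compact placement scores 4 and the scattered one 20, so a placer using this estimate would prefer the compact arrangement before routing the actual wires.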

In addition to creating the actual layout that will be manufactured, this

process also created a detailed account of timing—how long every signal

takes as it travels from its source to the transistor it switches on or off. This

detailed timing was sent back to the systems company for a final simulation

to ensure that everything still worked.
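The timing figures come from adding up delays along each signal path. The Python sketch below, under simplified assumptions, propagates the latest arrival time through a netlist: each cell adds a fixed delay, each net adds a wire delay standing in for what place & route measured, and a cell's output settles only after its slowest input. The delay values, cell names and clock budget are hypothetical, and this longest-path calculation is a simplification; the text describes the timing being fed back into a final simulation, not this exact computation.

```python
# Hypothetical intrinsic delay of each library cell, in nanoseconds.
CELL_DELAY_NS = {"INV": 0.10, "NAND2": 0.15, "NOR2": 0.20}


def arrival_times(netlist, primary_inputs, wire_delay_ns):
    """Latest time each net settles, assuming all primary inputs switch at t = 0.

    netlist: (cell_type, input_nets, output_net) tuples in topological order.
    wire_delay_ns: net name -> extra routing delay; nets not listed add none.
    """
    arrival = {net: 0.0 for net in primary_inputs}
    for cell_type, in_nets, out_net in netlist:
        latest_input = max(arrival[n] for n in in_nets)  # slowest input dominates
        arrival[out_net] = (latest_input + CELL_DELAY_NS[cell_type]
                            + wire_delay_ns.get(out_net, 0.0))
    return arrival


def meets_timing(arrival, output_net, clock_period_ns):
    """True if the output settles within one (hypothetical) clock period."""
    return arrival[output_net] <= clock_period_ns


NETLIST = [
    ("NAND2", ("a", "b"), "n1"),
    ("INV", ("n1",), "n2"),
    ("NOR2", ("n2", "c"), "y"),
]
# Stand-in for the wire delays that place & route would report.
WIRE_DELAY_NS = {"n1": 0.05, "y": 0.02}

if __name__ == "__main__":
    t = arrival_times(NETLIST, ["a", "b", "c"], WIRE_DELAY_NS)
    print(f"y settles at {t['y']:.2f} ns")
    print("meets a 0.5 ns budget:", meets_timing(t, "y", 0.5))
```

Here the output settles at 0.15 + 0.05 + 0.10 + 0.20 + 0.02 = 0.52 ns, so the path just misses a 0.5 ns budget; catching this kind of problem before tape-out was the point of the final check.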

After the design passed final simulation, the systems company took a

deep breath and gave the go-ahead to manufacture prototypes of the chip. At

the time, all the design data needed to make the photomasks was written onto

a computer tape, so the process was, and still is, called tape-out.

The ASIC company then had the masks made that were needed to run

the design through their fab. Chips were manufactured using one of two

main ASIC technologies: gate-array or cell-based. In a gate-array design, the

gates—a group of transistors that perform a function—were pre-fabricated

on a wafer (the gate-array “base”) so the masks only pattern the

interconnect. In cell-based design, masks were required to pattern all layers

on a blank wafer. The gate-array approach was faster and cheaper, but less

flexible. It was faster, because there were fewer masks to make and fewer

layers to be manufactured. It was cheaper, because the gate-array bases

were mass produced in higher volume than any individual design would be.

However, gate-array substrates only came in certain fixed sizes, and so the

designs often left many potential gates unused.
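The unused-gate penalty is easy to quantify. In the Python sketch below, a design must go on the smallest gate-array base that holds it. The base sizes are hypothetical, and the sketch assumes every gate on a base is usable, ignoring routing congestion, which could further limit how many gates a design could actually use.

```python
# Hypothetical gate-array base sizes, in gates; real vendors offered their own families.
BASE_SIZES = [5_000, 10_000, 20_000, 50_000]


def pick_base(design_gates, base_sizes=BASE_SIZES):
    """Return (base size, unused gates, utilization) for the smallest base that fits."""
    for size in sorted(base_sizes):
        if design_gates <= size:
            return size, size - design_gates, design_gates / size
    raise ValueError(f"{design_gates} gates does not fit on any available base")


if __name__ == "__main__":
    size, unused, utilization = pick_base(12_000)
    print(f"{size}-gate base, {unused} gates unused ({utilization:.0%} used)")
```

A 12,000-gate design lands on the 20,000-gate base and leaves 8,000 gates (40% of the die) unused, while a cell-based version of the same design would have been sized to fit.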

In a couple of months, the prototypes would be finished and samples

shipped back to the systems company. These parts would then be

incorporated into complete systems and those systems tested. For example,

if the chip went into an add-in board for a PC, a few boards would then be

manufactured, put into a PC, and checked for correct operation.

At the end of that process, the systems company took another deep

breath and placed an order with the ASIC company for volume

manufacturing, requesting thousands, or possibly even millions, of chips.

They would receive these a few months later, build them into their own

products, and ship those products to market. The final step in this journey

was the day we, the consumers, brought home our very own personal

computer or CD player.

The Lasting Effect of the ASIC Model

All semiconductor companies were caught up in ASIC in some way or

another because of the basic economics. Semiconductor technology

allowed systems companies to make medium-sized designs, and medium-

sized designs were pretty much all different. The technology didn’t yet

allow whole systems to be put on a single chip. This meant that

semiconductor companies could no longer survive by just supplying basic

building-block chips because those were largely being superseded by ASIC

chips. But they also couldn’t build whole systems like a PC, a television, or

a CD player because semiconductor technology did not allow for that level

of integration. Eventually, most semiconductor companies, including

Panasonic, Fujitsu, and Intel, joined the ASIC business, thus making the

market very competitive.

Although the ASIC business model filled an important niche in the

development of electronic products, it turned out to be a difficult business in

which to make money. The systems company owned the specialized

knowledge of what was in the chip, so the semiconductor company could

not price to value. The systems company also knew the size of the chip and

thus roughly what it should cost to make. The best money for ASIC

companies turned out to be making the largest, most difficult designs. It

took more expertise to successfully complete the physical design of these

big designs, so the leading ASIC companies, VLSI Technology and LSI

Logic, could charge premium pricing based on their ability to complete the

most challenging designs on schedule. If you are building a skyscraper, you

don’t go with a company that has only built houses.

ASIC companies had few designs they could make money on, and it

gradually became obvious just how unprofitable low-volume designs were.

All the ASIC companies realized that there were fewer than a hundred

designs a year that were really worth winning, and competition to win those

became fierce.

During this time, semiconductor technology continued to advance and it

became possible to build whole systems (or large parts of them) on a single

integrated circuit. These were known as systems-on-chip, or SoCs. The

ASIC companies started to build and sell whole systems, such as chipsets

for PCs or cellphones, as standard products in the traditional semiconductor model,

alongside their ASIC business. This made all semiconductor

companies start to look the same, with lines of standard products and,

often, an ASIC product line too.

One important aspect of the ASIC model was that the “tooling,” the

industry word for the photomasks, belonged to the ASIC company. This

meant that any given design could only be manufactured by its specific

ASIC company. Even if another semiconductor company offered a

great deal to manufacture a completed design, the systems company

couldn’t just hand over the masks made by the original ASIC company. This

became very important in the next phase of what ASIC would morph into:

design services.

ASIC design required a network of design centers all over the world

staffed with some of the best designers available, obviously an expensive

proposition. Their customers started to resent paying the premium to

support this infrastructure, especially on very high volume designs. While

the systems companies could shop around for a better price, switching

vendors was costly because it meant starting the design all over again with

the new semiconductor supplier.

Eventually both VLSI Technology and LSI Logic would be acquired.

VLSI was bought by NXP (still then called Philips Semiconductors) in 1999

for close to $1 billion. LSI Logic, which left the ASIC business and was

renamed LSI Corporation, was acquired by Avago in a $6.6 billion deal

announced in late 2013.

The ASIC Model Morphs into Design Services

By the early 1990s, in addition to the high cost of the ASIC model, two

other things had changed that spelled the beginning of the end for the ASIC

business. One was that foundries such as TSMC had come into existence.

The other was that the knowledge of how to do physical design had become

more widespread and at least partially encapsulated in software tools

available from the EDA industry. These changes gave systems companies

a new route to silicon that bypassed the ASIC companies completely.

Systems companies could now feasibly complete the entire design

themselves, including the physical design, and then use a foundry like

TSMC to manufacture it. This was known as customer-owned-tooling or

COT, because the systems company, not the ASIC company or the foundry,

owned the whole design from concept to masks. If one foundry gave poor

pricing, the systems company could transfer the design to a different

manufacturer without having to completely redesign the chip.

However, the COT approach was not without its challenges. Doing

physical design of a chip is not a simple task. Many systems companies

underestimated the value of the expertise for which ASIC companies had

charged a premium, and they struggled to complete designs on their own

without that support. As a result, a new breed of companies, known as

design services companies, emerged to meet this exploding demand for

support.

Design services companies played a role similar to that of the ASIC companies,

providing specialized semiconductor design knowledge to the systems

companies. In some cases, they would do the entire design, which is called

turn-key design. More often, they would do all or some of the physical

design and sometimes manage the interface with the foundry to oversee

the manufacturing process, another area where systems companies lacked

experience. One company in particular, Global Unichip, operates with a

business model identical to that of the old ASIC companies except in one

respect: it has no fab. It uses a foundry, primarily TSMC, to build all of

its customers’ products.

This is the layout of the ASIC landscape today: there is very limited ASIC

business conducted by a few semiconductor companies. There are design

services companies and virtual ASIC companies like Global Unichip and

eSilicon. There are no pure-play ASIC companies. A lot of IC functions

that were once implemented as ASICs are now implemented in field-programmable

gate arrays, or FPGAs, a technology important enough to need a

chapter of its own; the next main chapter is an exploration of

FPGAs. But first, a brief history of one of the companies that created the

ASIC business model, VLSI Technology, and one of the new breed of

design services companies, eSilicon.

In Their Own Words: VLSI Technology

As one of the companies that founded the ASIC business

model, VLSI Technology helped set the course of the entire

semiconductor industry. The company is no longer in business,

but one of its early and long-time employees, Paul McLellan, co-author

of this book, has written this history of VLSI Technology.

VLSI Technology was founded in 1979 by Dan Floyd, Jack Baletto and

Gunnar Wetlesen, who had worked together at the semiconductor

company Signetics. The initial investments in VLSI Technology came from

Hambrecht and Quist, a cross between a VC and a bank, and from Evans and

Sutherland, the simulation/graphics company. Semiconductor technology

had reached a point where significant systems or parts of systems could be

manufactured, and the original business plan was to build a fab to

manufacture parts that other people would design.

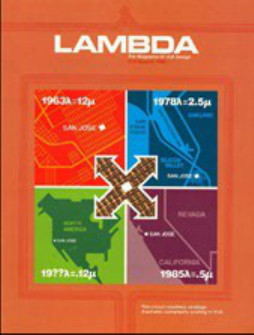

The fourth person to join the company, in 1980,

was Doug Fairbairn. He was working at Xerox Palo

Alto Research Center (PARC) and had started a fledgling publication on

very-large-scale integration (VLSI) design, Lambda Magazine. He went to

interview the three founders for an article, but was so intrigued by the new

company that he joined it.

He immediately realized that their plans for a foundry wouldn’t really

work without a new generation of design automation tools. Existing design

tools of that era were polygon-based layout editors, but semiconductor

technology was already past the point where you could reasonably design

everything by hand. Doug decided to take the opportunity to move from

the research environment into industry and build the company’s first development

group, to create the next generation of software for integrated circuit design.

In the first few years of VLSI Technology, the company was sustained

by designing ROMs (read-only memories) for the first generation of video

game consoles, which were all cartridge-based. Each cartridge actually

contained a ROM with the video-game binary programmed into it. The fab

in San Jose was not yet in high volume manufacturing, and so these were

actually outsourced to Rohm in Osaka, Japan. In parallel, Fairbairn hired a

group of PhDs, many from Carver Mead’s Silicon Structures Project at

Caltech, and the profits from video games were invested in a suite of tools

for what we would now call ASIC design, although that name didn’t come

until later.

Lambda Magazine.

VLSI Technology (hereafter simply VLSI) had a fab on McKay Drive in

San Jose. At the time, it was the only high-tech building in the area,

surrounded by greenhouses growing flowers and, across the street, the

Chrysanthemum Growers Association Hall that was sometimes used for

company-wide meetings. The first process brought up was 3 µm HMOS,

followed by 2 µm CMOS and 1.5 µm CMOS.

Fairly early on, the investors decided that the company’s management

team was too inexperienced to manage the anticipated growth. Al Stein was

brought in as CEO. The company went public in February 1983, still not

yet profitable, and almost immediately afterward, the three original

founders departed.

The initial design technology, still based largely on the Caltech/PARC

ideas in Mead and Conway’s seminal book, Introduction to VLSI Systems, was

a mixture of manual design with generators for basic structures, such as

registers and adders, using an internal language called VIP. The focus of the

tools was on verification, with a design rule checker (DRC), a circuit

extractor, a layout-versus-schematic (LVS) checker called net compare, and

simulators: VSIM, with no timing, and then TSIM, which had timing based

on a simple capacitive model.

But designs were getting too large for this approach, and it was clear

that layout tasks had to become much more automated, however inelegant that

seemed compared to Mead and Conway’s ideas. This led VLSI to

develop standard cell libraries and a full place and route system to

complement their existing schematic capture software.

In order to be successful, the design work had to get closer to the

customer. Initially this meant that the customer came to VLSI, and there

were several teams of customers’ designers working on site at VLSI’s San

Jose buildings. For example, the main chip in France Telecom’s initial

implementation of the online service Minitel was created by Telic (now

buried somewhere in Alcatel-Lucent), which sent a team of engineers from

Strasbourg, France, to take up residence in San Jose for several months.

The next step was to create a network of design centers initially in the

US, and then also in Japan and Europe, since it was clearly not scalable to

bring all the customers on-site to California.

VLSI also opened a research and development site at Sophia Antipolis in

the south of France. The site carried out design tool and

library development, and also served as a hub of expertise to support the

growing European business.

The IBM PC was then in its high growth phase and many customers of

VLSI were designing products for that market (modems, add-in peripheral

cards) or designing chips to create PC clones. In fact, VLSI had dozens of

customers making products for the PC market. To serve these customers,

VLSI developed the first of what today is called semiconductor IP,

although VLSI called them megacells (and later functional system blocks

or FSBs). These included all the standard components in a PC such as the

UARTs or the 6845 graphics controller.

Two key design automation products that VLSI pioneered in the late

1980s were the datapath compiler and the state-machine compiler, which

was effectively one of the first synthesis tools. The datapath compiler could

take a complex description for a datapath and quickly generate a fully laid

out datapath on silicon, using its own optimized custom library, not

standard cells. And the state-machine compiler could take a description of

a state machine (or just any old logic) and produce an optimized

implementation in standard cells. Together these two tools made creating

complex designs much easier.

VLSI saw robust growth in the 1980s, but it never made enough cash to

fund all the investment required for process technology development and

capital investment for a next-generation fab. They also had several false

starts. They entered and then exited the SRAM (static memory) business.

They entered and then exited a partnership to build a fab in Malaysia. They

had a partnership with Philips Semiconductors licensing process

technology that was never used.

In the late 1980s, they entered into a strategic partnership with Hitachi

in which Hitachi gained access to VLSI’s design tools and Hitachi licensed

VLSI its 1 µm process technology and made a significant cash investment.

This meant that VLSI could bring up a competitive 1 µm technology at its

second fab in San Antonio, TX. Eventually the two fabs were upgraded

from 5” to 8” wafers.

Development of the Chip Set

VLSI had already developed several megacells as IP for use in PCs. A

group of five engineers conducted an experiment with these megacells over

a weekend that involved putting all of them together onto a few chips. This

was the first PC chipset, which could be used to create a full PC with only

the addition of the Intel microprocessor and memory. VLSI ran with the

idea and built up a large business in PC chipset standard products to go

with its mainline ASIC business.

The PC chipset business was very successful and was dominated by VLSI

in the early 1990s. One generation of chipsets was even resold by Intel.

However, it was clear that it would eventually become a low-margin business

due to competition from Asia, and probably would finally be owned by

Intel who could design more and more functionality to work intimately with

its own next-generation microprocessors. VLSI decided to invest in system

knowledge for the GSM cellular standard that was starting to get off the

ground, as well as some other attractive end markets such as digital video.

Also, in that era, around 1987, Apple decided to build the Newton

personal digital assistant. They selected Acorn’s RISC processor and

insisted it be spun out as a separate company. So, in 1990, ARM was created with

Apple, Olivetti (which by then owned Acorn), and VLSI as the owners. VLSI

supplied all the design tools used to design the processors, and also

manufactured the initial parts. That story is told in more detail later in the

book.

Meanwhile, the market for second generation (digital) GSM phones

exploded. European companies, especially Nokia and Ericsson, were the

most successful handset manufacturers. At one point Ericsson

accounted for 40% of VLSI’s entire business. VLSI also started a major

investment at its French site to develop its own GSM baseband chips. They

built this up into a chipset business selling to second-tier manufacturers

who didn’t have enough system knowledge to develop GSM baseband

chips internally. They later licensed CDMA wireless technology from

Qualcomm and started to develop a CDMA product line primarily for the

US market. Between the standard product business and the large volume

of ASIC business, especially with Ericsson, the communication segment was

over half of VLSI’s semiconductor business.

By 1991, it was clear that VLSI was really two companies that should

already have separated: an EDA company with some of the best VLSI

design tools on the market, and an ASIC/ASSP company with a network

of design centers and two fabs manufacturing silicon. In 1991, the design

tool business was spun out to a new company called Compass Design

Automation, leaving VLSI Technology as a pure semiconductor business

(with Compass as one of their EDA suppliers).

Compass struggled to shake off the perception that it wasn’t really

independent of VLSI and as a result it had only a small ecosystem of

semiconductor companies that fully supported it with ASIC libraries. But

Compass also had its own portfolio of libraries, originally developed for

VLSI’s ASIC business. By creating standardized design rules (called

Passport) that worked in almost all fabs, it created the first library business

with a portfolio of standard cells, memory compilers, the datapath

compiler, and other foundation IP. This was very successful and grew to be

about 30% of Compass’s business.

Compass grew to nearly $60 million in revenue, but it was never

profitable. They had a fully integrated suite of design tools in an era when

the large EDA companies, which had grown through acquisition, had

educated the market to pick best-in-class point tools and use their internal

CAD departments to integrate them. So Compass was swimming against

the tide and despite the fact that every ASIC and every standard product

made by VSLI was designed exclusively using Compass tools and libraries,

they never shook off the perception that they were not leading edge. CAD


groups were reluctant to standardize on Compass, at least partly because

they would then have much less tool integration work to do.

In 1997, Compass was sold for $44 million to Avant!, which was mostly

interested in the library business as a complement to its own software

business. Avant! in turn was acquired by Synopsys in 2001 (for

$830 million). By then the software part of Compass, as opposed to the library

development, was largely based in France, and Cadence hired the entire

French group en masse; many of those engineers still work at Cadence

today. The library business was largely in California and

was integrated into Avant!

VLSI’s semiconductor business, both ASIC and ASSP, grew through the

1990s to about $600 million in revenue. The company focused on

wireless, digital video, and PC graphics, alongside an ASIC business

that was diversified across many separate segments.

In 1999, Philips Semiconductors (now called NXP) made a hostile

takeover bid for VLSI Technology. Philips had struggled to bring new processes

to market quickly along with the required libraries. As the ASIC business

grew more and more consumer-oriented, this became a big problem because

of the very short product life cycles. ASIC was VLSI’s lifeblood, and VLSI

was much quicker at getting designs going in new process generations, so

Philips figured that acquiring VLSI would shake up its internal processes

and also give it a network of leading design centers (by then renamed

technology centers). After some negotiation, VLSI was acquired by Philips

Semiconductors for just under a billion dollars, and it ceased to be an

independent company.


In Their Own Words: eSilicon Corporation

eSilicon was one of the first companies to focus on making

the benefits of the fabless semiconductor movement available

to a broader range of customers and markets. The company

is credited with the creation of the fabless ASIC model. In

this section, eSilicon shares some of its history and provides

its view of the ever-changing fabless business model.

eSilicon Corporation was founded in 2000 with Jack Harding as the

founding CEO and Seth Neiman of Crosspoint Venture Partners as the

first venture investor and outside board member. They both remain

involved in the company today, with Harding continuing as CEO and

Neiman now serving as Chairman of the Board.

Both Harding and Neiman brought important and complementary skills

to eSilicon that helped the company maneuver through some very

challenging times. Prior to eSilicon, Harding was President and CEO of

Cadence Design Systems, at the time the largest EDA supplier in the

industry. He assumed the leadership role at Cadence after its acquisition of

Cooper and Chyan Technology (CCT), where Harding was CEO. Prior to

CCT, Harding served as Executive Vice President of Zycad Corporation, a

specialty EDA hardware supplier. He began his career at IBM.

Seth Neiman is Co-Managing Partner at Crosspoint Venture Partners,

where he has been an active investor since 1994. Neiman’s investments

include Brocade, Foundry, Juniper and Avanex among many others. Prior


to joining Crosspoint, Neiman was an engineering and strategic product

executive at a number of successful startups including Dahlgren Control

Systems, Coactive Computing, and the TOPS division of Sun

Microsystems. Neiman was the lead investor in eSilicon and incubated the

company with Jack at the dawn of the Pleistocene epoch.

The Early Years

eSilicon’s original vision was to develop an online environment where

members of the globally disaggregated fabless semiconductor supply chain

could collaborate with end customers looking to re-aggregate their services.

The idea was straightforward: bring semiconductor suppliers and

consumers together and use the global reach of the Internet to facilitate a

marketplace where consumers could configure a supply chain online. The

resulting offering would simplify access to complex technology and reduce

the risk associated with complex design decisions. Many fabless enterprises

had struggled with these issues, taking weeks to months to develop a

complete plan for the implementation of a new custom chip. Chip die size

and cost estimates were difficult to develop, technology choices were varied

and somewhat confusing, and contractual commitments from supply chain

members took many iterations and often required a team of lawyers to

complete.

The original vision was simple, elegant and sorely needed. However, it

proved to be anything but simple to implement. In the very early days of

the company’s existence, two things happened that caused a shift in

strategy. First, a close look at the technical solutions required to create a

truly automated marketplace yielded significant challenges. Soon after the

formation of the company, eSilicon hired a group of very talented

individuals who had done their original research and development work at Bell

Labs. This team had broad knowledge of all aspects of semiconductor

design. It was this team’s detailed analysis that led to a better

understanding of the challenges that were ahead.


Second, a worldwide collapse of the Internet economy occurred soon

after the company was founded. The “bursting” of the Internet bubble

created substantial chaos for many companies. For eSilicon, it meant that

finding a reliable way to monetize its vision would be difficult, even if the

company could solve the substantial technical issues it faced. As a result,

most of the original vision was put on the shelf. The complete realization

of the “e” in eSilicon would have to wait for another day. All was not lost

in the transition, however. Business process automation and worldwide

supply chain relationships did foster the development of a unique

information backbone that the company leverages even today. More on

that later.

The Fabless ASIC Model

Mounting technical challenges and an economic collapse of the target

market have killed many companies. Things didn’t turn out that way at

eSilicon. Thanks to a very strong early team, visionary leadership and a little

luck, the company was able to redirect its efforts into a new, mainstream

business model. It was clear from the beginning that re-aggregating the

worldwide semiconductor supply chain was going to require a broad range

of skills. Certainly, design skills would be needed. But back-end

manufacturing knowledge was also going to be critical. Everything from

package design, test program development, early prototype validation,

volume manufacturing ramp, yield optimization, life testing, and failure

analysis would be needed to deliver a complete solution. Relationships with

all the supply chain members would be required, and building them took a special

kind of person with a special kind of network.

eSilicon assembled all these skill sets. That deep domain expertise and

broad supply chain network allowed the company to pioneer the fabless

ASIC model. The concept was simple: provide the complete design-to-manufacturing

services offered at the time by conventional ASIC suppliers such as LSI

Logic, but do it by leveraging a global, outsourced


supply chain. Customers would no longer be limited to the fab that their

ASIC supplier owned, or to that supplier’s cell libraries and design methodology.

Instead, a supply chain could be configured that optimally served the

customer’s needs. And eSilicon’s design and manufacturing skills and

supply chain network would deliver the final chip. The volume purchasing

leverage that eSilicon would build, coupled with the significant learning

eSilicon would achieve by addressing advanced design and manufacturing

problems on a daily basis, would create a best-in-class experience for

eSilicon’s customers.

eSilicon’s positioning, DAC 2000

As the company launched in the fall of 2000, the fabless ASIC segment

of the semiconductor market was born. Gartner/Dataquest began

coverage of this new and growing business segment, and many new fabless

ASIC companies followed. eSilicon’s first customer was Antara.net, whose

custom chip would generate real-world network traffic to allow

stress-testing of e-business sites before they went live.

Technology nodes were in the 180 nm to 130 nm range, and between

eSilicon’s launch in 2000 and 2004, 37 designs were taped out and over 14

million chips were shipped.

Fabless ASIC was an adequate description of the business model, since

everyone knew what an ASIC was, but the description fell short: a

managed outsourced model could be applied to many chip projects, both

standard and custom. As a result, eSilicon coined the term Vertical Service


Provider (VSP), and that term was used during the company’s first public

appearance at the Design Automation Conference (DAC) in 2000.

The model worked. eSilicon achieved a fair amount of recognition in the

early days as the supplier of the system chip that powered the original iPod

for Apple. The company also provided silicon for 2Wire, a company that

delivered residential Internet gateways and associated services for providers

such as AT&T. But it wasn’t only the delivery of “rock star” silicon that set

the company apart; some of the original e-business vision of eSilicon did

survive.

The company launched a work-in-process (WIP) management and

logistics tracking system dubbed eSilicon Access® during its first few years.

The company received a total of four patents for this technology between

2004 and 2010. eSilicon Access, for the first time, put the worldwide supply

chain on the desktop of all eSilicon’s customers. Using this system, any

customer could determine the status of its orders in the manufacturing

process and receive alerts when the status changed. eSilicon uses this same

technology to automate its internal business operations today.

Growing the Business

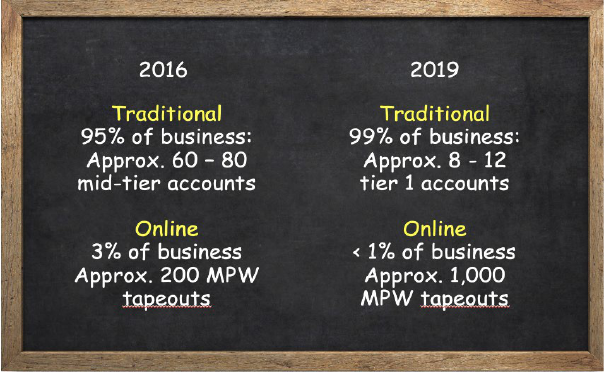

During the next phase of growth for the company, from 2005 to 2009,